The aim of this project is to experiment with a self-made USB stereo vision camera system to generate a depth-map image of an outdoor urban area (actually a garden with obstacles), to find out whether such a system is suitable for obstacle detection on a robotic mower. The USB camera I used (Typhoon Easycam 1.3 MPix) is very cheap (~12 EUR), so this might be the cheapest stereo system you can get.

For the software, the following steps are involved for the stereo vision system:

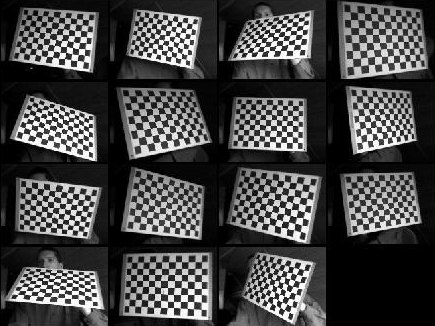

1. Calibrate the stereo USB cameras with the MATLAB Camera Calibration Toolbox to determine the internal and external camera parameters. The following picture shows the snapshot images (left camera) used for the stereo camera calibration.

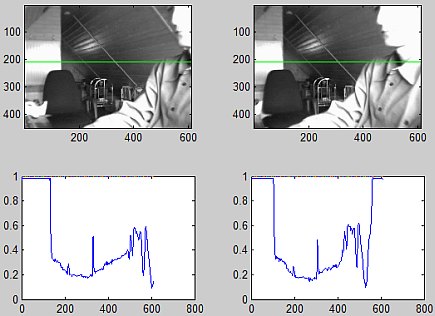

2. Use these camera parameters to generate rectified images for the left and right camera, so that each horizontal pixel line contains the same obstacle pixels in both images. Again, the calibration toolbox mentioned above was helpful here, since rectification is included.

3. Find an algorithm that finds correlations between pixels of the left and right images for each horizontal pixel line. This is the key element of a stereo vision system. Algorithms that produce accurate results tend to be slow and are often not suitable for realtime applications. For my first tests, I experimented with the MATLAB code for dense stereo matching and dense optical flow by Abhijit S. Ogale and Justin Domke, whose great work is available as an open-source C++ library (OpenVis3D). Running time for my test image was about 4 seconds (1.3 GHz Pentium PC).

4. Compute the disparity map based on the correlated pixels.

My test image:

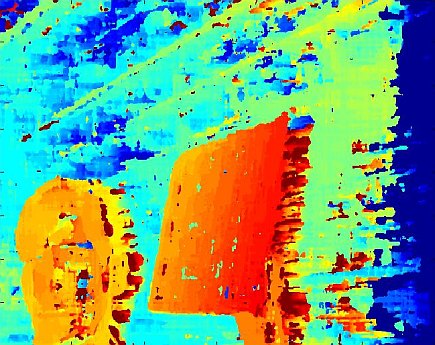

The disparity map generated (from high disparity on red pixels to low disparity on blue pixels):

The results are already impressive. Future plans involve finding faster algorithms, or maybe some idea that solves the problem in another way. A quick way to find matches between the left and right intensity scan lines, interval by interval, for each horizontal pixel line could be a solution, although it might still be too slow for realtime applications.
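To make the scanline matching idea concrete, here is a minimal sketch of naive block matching with a sum-of-absolute-differences (SAD) cost. It is not the OpenVis3D algorithm and not what produced the disparity map above. The function name `sad_scanline_match` and its parameters are made up for illustration, and it assumes rectified grayscale images of class double with `maxDisp` smaller than the image width.

```matlab
function dmap = sad_scanline_match(imL, imR, maxDisp, radius)
% SAD_SCANLINE_MATCH  Naive block matching on rectified grayscale images.
%   imL, imR : rectified images of equal size, class double
%   maxDisp  : largest disparity (in pixels) to test, must be < image width
%   radius   : half-width of the square matching window
% Returns dmap, the per-pixel disparity of the left image. Border pixels
% use a zero-padded window, so their values are less reliable.
    [rows, cols] = size(imL);
    win = ones(2*radius + 1);            % box filter that sums over the window
    bestCost = inf(rows, cols);
    dmap = zeros(rows, cols);
    for d = 0:maxDisp
        % Shift the right image d pixels to the right so that column x of
        % the left image is compared with column x-d of the right image.
        shifted = zeros(rows, cols);
        shifted(:, d+1:end) = imR(:, 1:end-d);
        cost = conv2(abs(imL - shifted), win, 'same');   % windowed SAD
        cost(:, 1:d) = inf;              % columns without a valid partner
        better = cost < bestCost;        % keep the cheapest disparity so far
        bestCost(better) = cost(better);
        dmap(better) = d;
    end
end
```

The cost grows linearly with `maxDisp`, which is one reason a large search range is expensive. A call could look like `dmap = sad_scanline_match(imL, imR, 64, 3);` where 64 and 3 are arbitrary example values.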

Update (06-17-2009): I have been asked several times now how exactly all steps are performed. So, here are the detailed steps:

- Learn how to use the calibration toolbox with one camera first. I don’t know how to calibrate the stereo-camera system without the chessboard image (does anyone know?). However, it is very easy to create this chessboard: download the .PDF file, print it out (measure the boxes; the vertical and horizontal edge lengths of each box should be the same), and stick it onto a solid board. Calibrate your stereo-camera system to compute your camera parameters. A correct calibration is absolutely necessary for the later correlation computation. Also, check that the pixel reprojection error computed after calibration is not too high (I think mine was < 1.0). After calibration, you’ll have a file “Calib_Results_stereo.mat” in the snapshots folder. This file contains the computed camera parameters.

- Now comes the tricky part 😉: in your working stereo camera system, every pair of camera frames you capture (left and right) needs to be rectified using the Camera Calibration Toolbox. You could do this with the function ‘rectify_stereo_pair.m’. Unfortunately, the toolbox has no function to compute the rectified images in memory, so I modified it. My function as well as my project (stereocam.m) is attached. Call this somewhere at your program start:

rectify_init

This will read in the camera parameters for your calibrated system (snapshots/Calib_Results_stereo.mat) and then calculate the matrices used for the rectification.

- Capture your two camera images (left and right).

- Convert them to grayscale if they are color:

imL=rgb2gray(imL)

imR=rgb2gray(imR)

- Convert the image pixel values into double format if your pixel values are integers:

imL=double(imL)

imR=double(imR)

- Rectify the images:

[imL, imR] = rectify(imL, imR)

- For better disparity maps, crop the rectified images so that any white area (due to rotation) is removed (find the cropping sizes by trial and error):

imL=imcrop(imL, [15,15, 610,450])

imR=imcrop(imR, [15,15, 610,450])

- The rectified and cropped images can now be used to calculate the disparity map. In my latest code, I used the ‘Single Matching Phase (SMP)’ algorithm by Di Stefano, Marchionni and Mattoccia (Image and Vision Computing, Vol. 22, No. 12):

[dmap]=VsaStereoMatlab(imL', imR', 0, Dr, radius, sigma, subpixel, match)

- Do any computations with the returned depth map, visualize it, etc.

And finally, here’s the Matlab code of my project (without the SMP code).

As far as I know, Bouguet’s calibration toolbox can rectify images, but the images have to include the calibration panel (the chessboard image). So how do you rectify image pairs without a chessboard?

I’ve done this with the following steps:

1. computing F (Harris + RANSAC);

2. calibrating the camera to get K (Bouguet’s toolbox);

3. computing E from F and K, and from it both cameras’ matrices (P1 and P2);

4. rectifying the images with A. Fusiello’s method (http://profs.sci.univr.it/~fusiello/demo/rect/);

But my rectification result is not so good: an error of about 10 pixels occurs in 700*700 image pairs.

What is your opinion on this problem?

hello

thank you for your helpful post

I am doing the same project you did, with the same tools

I need to get real distances for objects

1- I did the calibration, but I can’t get b, the baseline distance between the 2 cameras

2- how can I rectify images using the MATLAB toolbox when they are not chessboard images

3- I got a disparity map from OpenVis3D

but what can I do next

how can I get the correspondence from it

thank you

Due to your questions, I have added more details on my project steps to this blog. I hope they are helpful 🙂 Cheers, Alexander

Hello Alexander ,

Thank you really for replying , that helped me a lot …………

I am trying to code my graduation project that will be handled at 9/7 🙁

Its objective is to help blind people avoid obstacles with a real-time system, and so on

This is what I did:

1- Calibrated the 2 cameras using the MATLAB toolbox (without cameras: I used the images from the toolbox until I can get my cameras); that went fine

2- Rectified the images: I got the “Calibrating_results_stereo”, calculated new parameters and rectified the 2 images

3- Cropped the images (that is what I did today 🙂 )

4- I can’t find the “Single Matching Phase (SMP)” algorithm on the internet, and I can’t find the source code

I intended to work with OpenVis3D

The MATLAB code example in OpenVis3D was

minshift = 5;

maxshift = 15;

[bestshiftsL, occlL, bestshiftsR, occlR] = OvStereoMatlab(i1, i2, minshift, maxshift);

1- where can I get minshift and maxshift from

2- is bestshiftsL the shift of the pixels of the left image taking the right as reference, and bestshiftsR the opposite? Is that the disparity map?

For your library, where can I get these from

radius = 7; % window radius (4-7)

Dr = 96; % Disparity range

subpixel = 8; % Subpixel factor

sigma = 0; % set min variance

match = 1024; % fixed parameter

5- in the end i must do the triangulation (i hope i can reach that)

Xl =f * ((x+b/2)/(f-z))

where can I get b (the baseline distance) from? I think it’s somewhere in the calibration parameters

Thank you really for your help

i am very grateful

Maria Fayed

I’ve been trying to find the SMP code you mentioned for finding the correspondence between the images. I assume you meant the journal “Image and Vision Computing”, article “A fast area-based stereo matching algorithm”. I was hoping you could provide the SMP code, or point me in the direction of whoever I could get it from.

Thanks

HELLO again 🙂

Thanks for your help, I have accomplished my calibration and rectification

but in the disparity map step I ran into many difficulties

1- I tried to use OpenVis3D: the results are fantastic for its own images, but when it comes to mine, they are awful

2- I tried to use Shawn Lankton’s model: it gives good results for its own images too, but not for mine. Also, it doesn’t take the rectification into account, so the input images must be RGB, and mine are grayscale after rectification

How can I calculate the maximum shift for the images?

Thanks a lot

I think the maximum shift is the spacing between the cameras, is that right?

Hello Maria,

Sorry for answering you so late. Let me try to answer your questions 🙂

1. Correspondence problem. For every point in one image, find the corresponding point in the other image and compute the disparity of these two points. In the disparity map, each pixel value is the disparity (shift) of the corresponding points. The higher the shift, the lower the depth of that pixel, i.e. the closer the object. The higher the maximum possible shift, the more computation is needed.

Before you try out your stereo system live, verify that all parts of it are working correctly. Verify that your calibration was successful by using an arbitrary rectified pair of your calibration images (the left+right chessboard images) as input to your stereo matching algorithm. This should give you a good result (like the depth image shown in my blog article above). If it does not work, then your calibration was not successful and you need to recalibrate.

Once this works well, go live with your cameras, take a pair of left and right frames, rectify them and compare both rectified images visually. If your rectification was successful, all pixels belonging to the same object should be located on the same scanline in both images. Have a look at the comparison of my left and right rectified images in my blog article above. One scanline is marked in green, and the left image could be transformed into the right image just by moving the individual objects horizontally. The vertical position of the objects is always identical in both images. That is very important for a good rectification result.

Moving problem: If you intend to move your stereo camera while capturing, you’ll need to ensure that the frames of the right and left camera are captured at exactly the same time. A low-cost system might not solve this problem; better cameras have a trigger button to activate capturing on both cameras at the same time.

Also, the system might not work when the left camera gets more or less light than the right camera (due to the small shift in the environment). Then one image is darker or lighter than the other, which makes stereo matching more difficult.

And last but not least, both cameras should be exactly the same 🙂

2. Triangulation. Given the disparity map, the focal length of the two cameras and the geometry of the stereo setup (relative positions of the cameras), compute the (X,Y,Z) coordinates of all points in the images.
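For an ideal rectified pair, the triangulation step reduces to the standard pinhole relations Z = f·b/d, X = (x − cx)·Z/f and Y = (y − cy)·Z/f, where d is the disparity in pixels, f the focal length in pixels and b the baseline. The following is only a sketch under those assumptions. The function name `disparity_to_xyz` and all parameter names are hypothetical, `cx` and `cy` must use the same 1-based pixel indexing as the image, and a disparity map that was scaled by a subpixel factor would first have to be converted back to pixels.

```matlab
function [X, Y, Z] = disparity_to_xyz(dmap, f, b, cx, cy)
% DISPARITY_TO_XYZ  Triangulate a disparity map from a rectified pair.
%   dmap   : disparity in pixels (left image as reference)
%   f      : focal length in pixels of the rectified cameras
%   b      : baseline (distance between the two camera centers)
%   cx, cy : principal point in pixels of the rectified left camera
% X, Y, Z are in the units of b; pixels with disparity <= 0 become NaN.
    [rows, cols] = size(dmap);
    [x, y] = meshgrid(1:cols, 1:rows);   % 1-based pixel coordinates
    d = double(dmap);
    d(d <= 0) = NaN;                     % no match, depth undefined
    Z = f * b ./ d;                      % depth along the optical axis
    X = (x - cx) .* Z / f;               % offset to the right of the axis
    Y = (y - cy) .* Z / f;               % offset below the axis
end
```

In Bouguet’s toolbox, the stereo result file should contain the translation vector `T` between the two cameras (in the units of your chessboard square size), so `norm(T)` would be a candidate for b; please check the variable names in your own file. The same relation also gives a rough upper bound for the search range: the largest disparity is about f·b/Z_min for the closest distance Z_min you care about. With the made-up values f = 800 px, b = 0.1 m and Z_min = 1 m, that is 80 pixels.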

I hope my answers are helpful 🙂

Alexander

This is an interesting project. I heard that they are using stereo cameras in sign language to shape avatars hands. read here: http://www.wordsign.org/Technology.html

Hello Alexander ,

It’s very helpful, and now I can get good rectified stereo images, thanks a lot, but I just wonder how can I get the SMP code, from where?

Hey Alexander!

I really appreciate what you’ve done here. I am writing my thesis on this subject, and it would really help me a lot if you could tell me something about that Single Matching Phase algorithm, because I’ve been having a hard time figuring it out from the source. If it is possible to use some other algorithm for my real-time stereo system, that would be an option for me as well. I just hope you can give me a heads up on this matter.

Thanks a lot and keep up the good work,

Tamás

What a very interesting article. I can hardly wait for something new to be written. Congratulations.

hello

I work in the field of localization of mobile robots using stereo vision

I need image rectification, please send me the rectification code

thx

Hi, I am working on a project related to stereo vision for obstacle avoidance, and I am trying to use Shawn Lankton’s code for disparity and depth estimation, but it is not giving me good results. Can anyone please help me improve the disparity performance?

I have tried many changes to the camera calibration, again and again, but I couldn’t get good results.

Thank you so much for this! I’ve been trying to hack the same code from the Calibration Toolbox to work with web cams, but I missed the bit where you had to convert the image to a double. All working now, thanks!

One thing worth noting for anyone else trying this, is that if you call:

obj.ReturnedColorSpace = 'grayscale';

for each videoinput object, ie, each camera, at the start of the program, you don’t need to convert each captured image to grayscale. This saves a bit of processing time.

One question, if you can remember so far back, is how did you manage to read in from two identical cameras? I had problems when I tried to do this, as when I started the second camera, the driver was being used for the first!

Hi I am asking if you can help me to write a matlab steps to capture still images from two webcams at the same time, Best regards.

hi

I need stereo vision calibration in OpenCV

can you send the calibration code?

I use 2 webcams in the project

please help me!

thanks