So you want to map your world in 3D (aka ‘mapping’), and at the same time track your 3D position in it (aka ‘localization’)?

Ideas for outdoor SLAM:

a) passive RGB (monochrome camera) or RGBD (stereo-camera) devices

b) active RGBD (3D camera) or 3D Lidar devices

General SLAM approach:

1. Estimate visual odometry (2D/3D) using monochrome/stereo/depth images

2. Scan matching, e.g. ICP (iterative closest point)

3. Loop closure using probabilistic methods (e.g. OpenFABMAP)
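To make the scan matching step more concrete, here is a minimal 2D ICP sketch in plain Python. It is only an illustration under simplifying assumptions (2D points, brute-force nearest neighbours, no outlier rejection), not the implementation used by any of the tools mentioned here. The function names `best_rigid_2d`, `transform_2d` and `icp_2d` are made up for this example.

```python
import math

def best_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired src points onto dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        # work with coordinates relative to the two centroids
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # translation moves the rotated source centroid onto the target centroid
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def transform_2d(points, theta, tx, ty):
    """Rotate points by theta (radians) and then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp_2d(src, dst, iterations=20):
    """Iteratively align src to dst and return the aligned copy of src."""
    current = list(src)
    for _ in range(iterations):
        # 1) pair every point with its closest point in dst (brute force)
        matched = [min(dst, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in current]
        # 2) solve for the rigid motion that best fits these pairs and apply it
        theta, tx, ty = best_rigid_2d(current, matched)
        current = transform_2d(current, theta, tx, ty)
    return current

# Example: an L-shaped "scan" that was slightly rotated and shifted
reference = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (0.0, 1.0), (0.0, 2.0)]
moved = transform_2d(reference, 0.02, 0.1, -0.05)
aligned = icp_2d(moved, reference)
```

ICP only converges to the right answer when the two scans are already roughly aligned, which is why it is usually seeded with the odometry estimate from step 1.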

A) Using a photo camera (non-realtime, realtime?)

-visual odometry (e.g. Google Tango)

-multi-view stereo

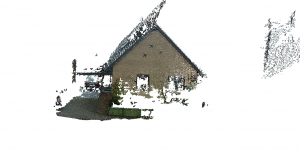

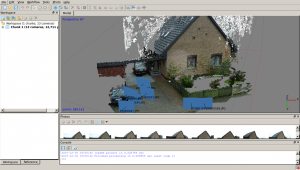

1. Take photos of your scene from different positions (I’m using a Panasonic DMC-TZ8 digicam).

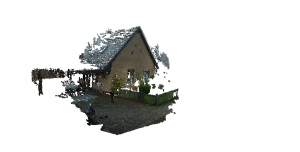

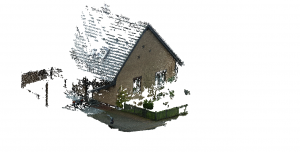

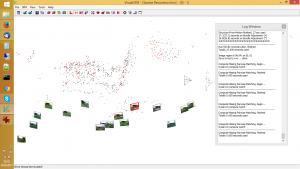

2. Calculate sparse point cloud (=points used for the matching) (OpenMVG, …)

3. Reconstruct dense point cloud (=triangulation of all points) (pmvs2, …)

4. Visualize dense point cloud (MeshLab, …)

5. Optional: take a new photo at an arbitrary position and use the same principles to estimate the current camera position!
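The geometric core of the reconstruction steps above is triangulation: once the camera positions are known, a 3D point is found where the viewing rays from two photos (nearly) intersect. The sketch below uses the simple midpoint method in plain Python. It is an illustration only and not what OpenMVG or pmvs2 do internally; the function name and the camera positions are made up for the example.

```python
def triangulate_midpoint(o1, d1, o2, d2):
    """Midpoint of the shortest segment between rays o1 + s*d1 and o2 + t*d2."""
    dot = lambda a, b: sum(x * y for x, y in zip(a, b))
    w = tuple(p - q for p, q in zip(o1, o2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel, cannot triangulate")
    s = (b * e - c * d) / denom   # parameter along the first ray
    t = (a * e - b * d) / denom   # parameter along the second ray
    p1 = tuple(o + s * k for o, k in zip(o1, d1))
    p2 = tuple(o + t * k for o, k in zip(o2, d2))
    return tuple((u + v) / 2.0 for u, v in zip(p1, p2))

# Two hypothetical camera centres 1 m apart, both looking at the same scene point
cam1 = (0.0, 0.0, 0.0)
cam2 = (1.0, 0.0, 0.0)
scene_point = (0.5, 0.2, 4.0)
ray1 = tuple(p - c for p, c in zip(scene_point, cam1))
ray2 = tuple(p - c for p, c in zip(scene_point, cam2))
estimate = triangulate_midpoint(cam1, ray1, cam2, ray2)
```

With real photos the rays never intersect exactly because of noise in the matched feature positions, so the midpoint is only a first guess that a real pipeline would refine further.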

VisualSFM (Structure from motion)

B) Using a 3D camera (realtime)

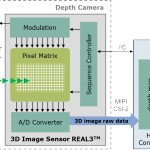

The camera projects an IR pattern (IR projector) and at the same time captures IR images (IR camera) to measure the distance (depth) for each pixel (RGBD). Robots can build a map of their surroundings very quickly because the distance calculation is simple and needs little computational power.
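To illustrate why the mapping part is cheap: once every pixel has a depth value, turning it into a 3D point is just the pinhole camera model. The sketch below assumes a pinhole model without lens distortion. The intrinsic values (`FX`, `FY`, `CX`, `CY`) are hypothetical placeholders and not calibration data of any of the cameras listed here.

```python
def depth_pixel_to_point(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with a depth in metres to a 3D point in the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)

# Hypothetical intrinsics for a 640x480 depth image (assumed, not measured)
FX = FY = 570.0
CX, CY = 319.5, 239.5

# A pixel to the lower right of the image centre, measured at 2 m distance
point = depth_pixel_to_point(400, 300, 2.0, FX, FY, CX, CY)
print(point)
```

Doing this for all pixels of a depth frame gives one point cloud per frame; the SLAM software then has to register these clouds against each other.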

- Google Tango phone (e.g. Phab2PRO):

OmniVision OV7251 100 fps global shutter fish eye camera

Infineon/PMD ToF pixel sensor IRS1645C – 224×172 pixel (38k)

(source: Infineon)

https://www.youtube.com/watch?v=ieqaCv9OEBo

(can broadcast Google Tango position data to external devices using TangoAnywhere)

- Asus Xtion Pro, Kinect 1.0: structured light and triangulation

(installation NOTE for new Asus Xtion Pro:

sudo apt-get install --reinstall libopenni-sensor-primesense0)

- Creative Senz3D/Intel (DepthSense 325), Kinect 2.0, Google Tango: pulsed light and time-of-flight measurement

- Do-It-Yourself (3D HD webcam)

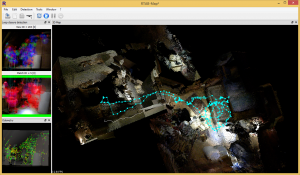

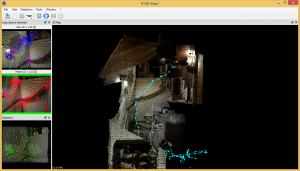

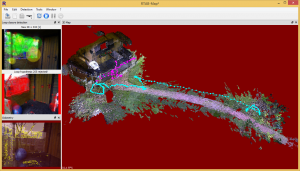

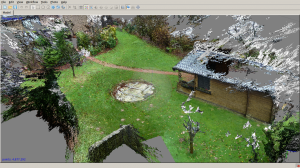

Realtime SLAM: RTABMap

This software can map your 3D world and track your position in it. You can find ready Windows/Mac executables for download here.

Examples:

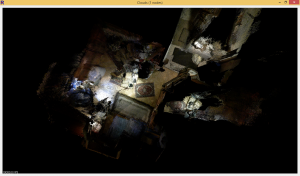

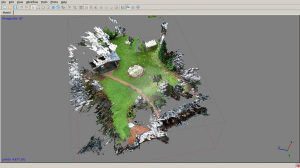

Two rooms 3D reconstruction (aka ‘mapping’)

Two rooms 3D position tracking (aka ‘localization’)

Stairs 3D reconstruction/tracking

Outdoor 3D mapping/tracking

NOTE: The 3D camera used here doesn’t work in sunlight (it cannot measure depth). Even when depth information is available, RTABMap sometimes has difficulty finding ‘features’ in the lawn images, so it is difficult to map a lawn that has no obstacles on it.

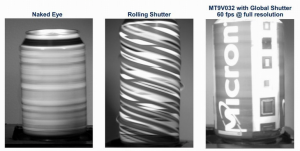

C) Using a global shutter camera (realtime)

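Unlike ordinary low-cost USB cameras, a global shutter camera captures all pixels of a frame at exactly the same time. With a rolling shutter the rows are read out one after another, so a moving camera or scene produces skewed frames. A global shutter is therefore required for realtime monocular/stereo SLAM operation (comparing consecutive image frames for depth estimation).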
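A rough feeling for the size of the rolling shutter problem can be had with a few lines of Python. The sketch assumes that rows are read out one after another at a constant rate; the readout time and the image speed are hypothetical numbers chosen for illustration, not data of a specific camera.

```python
def row_time_offset(row, total_rows, readout_time_s):
    """Capture delay of a row relative to row 0, assuming a constant row readout rate."""
    return row * readout_time_s / total_rows

def apparent_shift_px(row, total_rows, readout_time_s, speed_px_per_s):
    """Horizontal displacement of a moving feature in that row compared to row 0."""
    return speed_px_per_s * row_time_offset(row, total_rows, readout_time_s)

# Hypothetical values: 480 rows read out in 16 ms, feature moving at 1000 px/s
ROWS = 480
READOUT_S = 0.016
SPEED_PX_S = 1000.0

for row in (0, 240, 479):
    shift = apparent_shift_px(row, ROWS, READOUT_S, SPEED_PX_S)
    print(f"row {row}: shifted by about {shift:.1f} px")
```

With a global shutter the delay is zero for all rows, so a vertical edge stays vertical even when the camera turns quickly.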

60fps global shutter rotating object example (source: Micron Technology, MT9V032)

Low cost global shutter cameras:

- Aptina MT9V034 global shutter WVGA CMOS sensor (monochrome), 60 fps, USB 3.0, 1/3″, 0.4 MP, resolution 752×480, S-mount lens (M12x0.5), approx. 199 USD: LI-USB30-V034M, mvBlueFOX-MLC200wG

sensor width (w’): 4.51mm

sensor height (h’): 2.88mm

sensor diagonal (d’): 5.35mm

- …
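The sensor dimensions follow from the resolution and the pixel pitch, and together with the focal length of the S-mount lens they give the field of view. The sketch below assumes a pixel pitch of 6.0 µm for the MT9V034 (please check the datasheet) and an ideal pinhole lens without distortion; the function names are made up for this example.

```python
import math

PIXEL_PITCH_UM = 6.0          # assumed pixel pitch of the MT9V034, check the datasheet
COLS, ROWS = 752, 480         # resolution given above

sensor_width_mm = COLS * PIXEL_PITCH_UM / 1000.0
sensor_height_mm = ROWS * PIXEL_PITCH_UM / 1000.0
sensor_diagonal_mm = math.hypot(sensor_width_mm, sensor_height_mm)

def field_of_view_deg(sensor_size_mm, focal_length_mm):
    """Field of view of an ideal pinhole lens along one sensor dimension."""
    return math.degrees(2.0 * math.atan(sensor_size_mm / (2.0 * focal_length_mm)))

print(round(sensor_width_mm, 2), round(sensor_height_mm, 2), round(sensor_diagonal_mm, 2))
```

For example, `field_of_view_deg(sensor_width_mm, f)` gives the horizontal field of view for a lens with focal length `f` in mm. Real wide-angle M12 lenses distort strongly, so treat the result as an estimate only.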

Realtime SLAM: LSD-SLAM, omni-lsdslam, …

D) Using a stereo-camera

For realtime operation, the stereo-camera must use a global shutter (see above).

- DUO mini – USB stereo camera with global shutter, approx. 275 EUR

- ZED Stereo Camera – synchronized rolling shutter, approx. 450 USD
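A stereo camera gets depth from the horizontal offset (disparity) of the same feature in the left and right image. For a rectified camera pair the relation is depth = focal length × baseline / disparity. The sketch below assumes rectified images and uses hypothetical values for focal length and baseline, not the specifications of the cameras listed above.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres of a feature seen by a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (feature at infinity or mismatched)")
    return focal_length_px * baseline_m / disparity_px

# Hypothetical stereo rig: 700 px focal length, 12 cm baseline
FOCAL_PX = 700.0
BASELINE_M = 0.12

for disparity in (70.0, 35.0, 7.0):
    depth = depth_from_disparity(disparity, FOCAL_PX, BASELINE_M)
    print(f"disparity {disparity:.0f} px -> about {depth:.1f} m")
```

Because depth is inversely proportional to disparity, a one pixel matching error matters little for near objects but a lot for far ones, which limits the useful range outdoors.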

Realtime outdoor SLAM:

E) Using a 360 degree camera

USB camera (Trust eLight HD 720p) and BubbleScope 360 degree lens attachment:

Video (ratslam outdoor test)

Video (feature points)

F) Using a 2D Lidar

360 degree LidarLite v2
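A rotating single-beam range finder delivers one distance per angle step. Before scan matching, these readings are usually converted into 2D points in the sensor frame. The sketch below assumes evenly spaced readings over one full turn and marks invalid readings as `None` or non-positive values; it does not use any real LidarLite API.

```python
import math

def scan_to_points(ranges_m, start_angle_rad=0.0):
    """Convert evenly spaced 360 degree range readings (metres) to (x, y) points."""
    n = len(ranges_m)
    if n == 0:
        return []
    step = 2.0 * math.pi / n          # angle between two consecutive readings
    points = []
    for i, r in enumerate(ranges_m):
        if r is None or r <= 0.0:     # skip invalid readings
            continue
        angle = start_angle_rad + i * step
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points

# Four readings taken at 0, 90, 180 and 270 degrees
points = scan_to_points([1.0, 2.0, 3.0, 4.0])
```

Two consecutive point sets produced this way are what a 2D scan matcher such as ICP takes as input.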

G) Using a 3D Lidar

The world is waiting for the affordable 3D LIDAR…

Further links

SLAM benchmark: http://www.cvlibs.net/datasets/kitti/eval_odometry.php

PCL (PointCloudLibrary): http://pointclouds.org/documentation/tutorials/

Another stereo vision camera from e-con Systems: Tara, a USB stereo camera based on the OnSemi MT9V024 imaging sensor. It is used in 3D stereo vision applications such as depth sensing, disparity maps, point clouds, etc.

The Tara 3D stereo camera now supports the Robot Operating System (ROS)

https://github.com/dilipkumar25/see3cam

The ZED camera looks very good for environment reconstruction, but I wonder whether it is OK for mapping the positions of surrounding objects. Can the camera still detect objects that are small or that lack visual features (color) against the background?