Endlich mal wieder ein kleines Projekt, welches viele Dinge der Informatik miteinander verbindet: Servos, Mikrocontroller, USB-Kamera, Mikrocontroller <-> PC-Kommunikation, HTTP-Server, Javascript, AJAX, Python …

Aber jetzt erstmal im Detail:

Wie der Titel schon andeutet, geht es darum, eine USB-Webcam, welche auf zwei Servos montiert wurde via einem Web-Interface fernzusteuern – und natürlich das Bild der Kamera zu übertragen.

Das Projekt ist ähnlich dem Projekt von Tobias Weis – allerdings wird hier Windows und als Skriptsprache Python (statt Linux und PHP) verwendet.

Funktionsweise

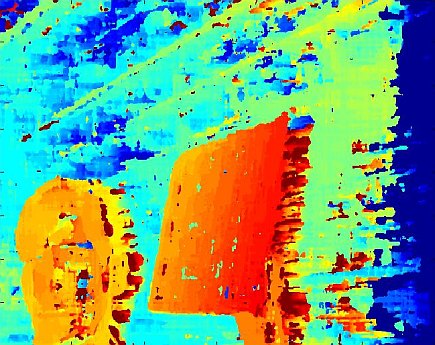

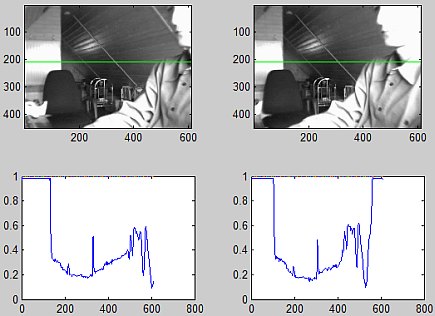

Die Servos werden über Pulsweitenmodulation (PWM) vom Mikrocontroller angesteuert, der Mikrocontroller erhält die Steuerbefehle via RS232-Schnittstelle (RS232 through USB). Ein auf dem PC laufender und in Python geschriebener Web-Server liest regelmäßig Bilder von der USB-Kamera, nimmt Befehle vom Web-Client entgegen (Kamera nach rech/links/oben/unten) und schickt diese Befehle dann an den Mikrocontroller.

Benötigte Hardware

- Mikrocontroller Atmel ATMEGA8L (ich verwende das myAVR Board MK2 USB von myAVR) – dieses Board enthält den Mikrocontroller sowie einen Programmer zum Übertragen der Software in den Mikrocontroller via USB.

- 2 handelsübliche Servos (werden im Modellbau eingesetzt und gibt es teilweise recht günstig bei eBay – ansonsten beim Modellbauer, Conrad, …)

- USB-Webcam (gibt’s überall)

- Ein Netzteil (5V, ca. 1A) zur Energieversorgung der Servos

Benötigte Software

- Atmel AVR Studio 4 (kann man bei Atmel herunterladen) – zum Kompilieren/Übertragen der Mikrocontrollersoftware

- Python 2.6 – Skriptspraceh zum Ausführen des Web-Servers, Kamera-Zugriff, Mikrocontroller-Kommunikation etc.

- Python Windows Extensions – wird für von der Universal Serial Port Python Library benötigt

- Python Imaging Library (PIL) – wird für die Python VideoCapture Library benötigt

Schritt 1: Servos montieren und mit Mikrocontroller verbinden

Den ersten Servo auf ein Brett fixieren, den zweiten Servo auf den ersten montieren und die Kamera auf den zweiten Servo befestigen. Dann die Steuerleitungen der Servos mit den Atmel Pins PB1 (OC1A) und PB2 (OC1B) verbinden. Zuletzt die +5V und Masse-Leitung der Servos mit dem externen Netzteil verbinden und die Masse-Leitung des externen Netzteils mit der Masse des Atmels verbinden (siehe auch das Schaltbild von Tobias).

Schritt 2: Mikrocontroller programmieren

Als nächstes wird der Atmel-Mikrocontroller programmiert. Zunächst sicherstellen dass die Fuse Bits des Atmels so eingestellt sind, dass dieser mit dem externen 3.6864 Mhz Quartz arbeitet (wichtig für die RS232 Kommunikation), z.B. mit dem Tool AVROSPII. Dann die Mikrocontroller-Software (avr/test2.c bzw. Projektdatei avr/test2.aps) in den Atmel mit AVR Studio übertragen (Build->Build und danach Tool-AVR Prog). Falls das myAVR-Board nicht gefunden wird, überprüfen ob COM1 oder COM2 für den USB-Treiber (->Gerätemanager) verwendet wird.

Nach erfolgereicher Übertragung der Mikrocontroller-Software kann man die Servos mit der Batch-Datei (avr/term.bat) austesten, welche ein RS232-Terminal startet. Durch Drücken der Tasten 1, 2, 3 oder 4 kann man die Servos steuern (rechts/links/oben/unten).

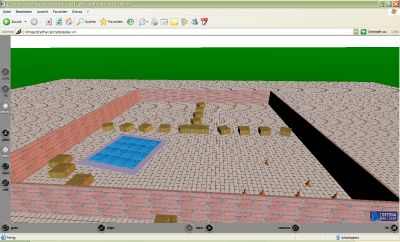

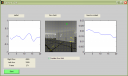

Schritt 3: Web-Server starten

Die WebCam mit dem PC verbinden. Dann den Web-Server starten (im Verzeichnis control ausführen: python start.py). Nach ein paar Sekunden läuft der Web-Server dann auf Port 8080. Im Web-Browser gibt man also “http://localhost:8080” als URL ein und mit ein bisschen Glück sieht man das Web-Interface der Steuerungssoftware.