Monthly Archives: January 2009

Optical flow based robot obstacle avoidance with Matlab

This is the result of a project in which a virtual robot avoids obstacles in a virtual environment without any prior knowledge of that environment: the robot navigates autonomously, using only an analysis of its virtual camera view.

In detail, this example project shows:

1. Create a virtual environment for a virtual robot and display the robot’s camera view

2. Capture the robot’s camera view for analysis

3. Compute the optical flow field of the camera view

4. Estimate the focus of expansion (FOE) and time-to-contact (TTC)

5. Detect obstacles and make a balance decision (turn the robot left or right)

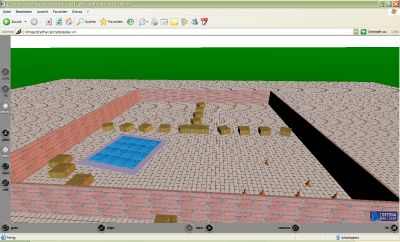

Matlab’s Virtual Reality toolbox makes it possible to not only visualize a virtual world, but also capture it into an image from a specified position, orientation and rotation. The virtual world was created in VRML with a plain text editor and it can be viewed in your internet browser if you have installed a VRML viewer (you can install one here).

(Click here to view the VRML file with your VRML viewer.)

To calculate the optical flow field between two successive camera images, I used a C-optimized version of Horn and Schunck’s optical flow algorithm (see here for details).
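For reference, here is the textbook form of the Horn and Schunck iteration. The C implementation used in this project is not reproduced in this post, so take this as a sketch of the standard method rather than the exact code that was run. The symbols are assumptions of the sketch: $I_x$, $I_y$, $I_t$ are the spatial and temporal image derivatives, $(u, v)$ is the flow vector at a pixel, $\bar{u}$, $\bar{v}$ are local neighbourhood averages of the flow, and $\alpha$ is the smoothness weight.

```latex
% Energy minimised by Horn-Schunck: brightness constancy plus a smoothness term
E(u, v) = \iint \left[ (I_x u + I_y v + I_t)^2
          + \alpha^2 \left( \lVert \nabla u \rVert^2 + \lVert \nabla v \rVert^2 \right) \right] dx\, dy

% Iterative update for each pixel (k is the iteration index)
u^{k+1} = \bar{u}^{k} - \frac{I_x \left( I_x \bar{u}^{k} + I_y \bar{v}^{k} + I_t \right)}{\alpha^2 + I_x^2 + I_y^2}
\qquad
v^{k+1} = \bar{v}^{k} - \frac{I_y \left( I_x \bar{u}^{k} + I_y \bar{v}^{k} + I_t \right)}{\alpha^2 + I_x^2 + I_y^2}
```

A larger $\alpha$ gives a smoother flow field, while a smaller one follows the local image gradients more closely.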

Based on this optical flow field, the flow magnitudes of the left and right halves of each image are summed. If the total flow magnitude over the whole view reaches a certain threshold, it is assumed that there is an obstacle in front of the robot. The left and right sums are then used to formulate a balance strategy: if the right flow is larger than the left flow, the robot turns left; otherwise it turns right.
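The balance strategy can be sketched in a few lines. The project itself was written in Matlab; the Python below is only an illustration, and the names `half_flow_sums` and `balance_decision`, the `"forward"` return value, the list-of-rows flow layout and the handling of an odd image width are all assumptions made for this sketch, not part of the original code.

```python
import math


def half_flow_sums(flow):
    """Sum the flow magnitudes of the left and right image halves.

    `flow` is a list of rows; each row is a list of (u, v) flow vectors.
    For an odd width the centre column is counted in the right half
    (an arbitrary choice made for this sketch).
    """
    left = 0.0
    right = 0.0
    for row in flow:
        mid = len(row) // 2
        for x, (u, v) in enumerate(row):
            magnitude = math.hypot(u, v)  # sqrt(u^2 + v^2)
            if x < mid:
                left += magnitude
            else:
                right += magnitude
    return left, right


def balance_decision(flow, threshold):
    """Return "forward", "left" or "right" for one flow field."""
    left, right = half_flow_sums(flow)
    # No obstacle assumed while the total flow stays below the threshold.
    if left + right < threshold:
        return "forward"
    # More flow on the right means the obstacle is on the right: turn left.
    return "left" if right > left else "right"


# Example: a 2x2 flow field with all motion in the right half.
example_flow = [[(0.0, 0.0), (3.0, 4.0)],
                [(0.0, 0.0), (0.0, 0.0)]]
decision = balance_decision(example_flow, threshold=4.0)
```

The threshold value depends on image size and robot speed, so it has to be tuned for the scene; the value in the example is arbitrary.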

Robot’s camera view at the same time: