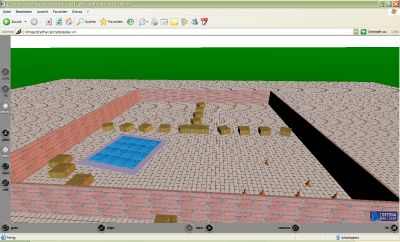

This is the result of a project in which a virtual robot avoids obstacles in a virtual environment without any prior knowledge of that environment. The robot navigates autonomously, using only its virtual camera view.

In detail, this example project shows how to:

1. Create a virtual environment for a virtual robot and display the robot’s camera view

2. Capture the robot’s camera view for analysis

3. Compute the optical flow field of the camera view

4. Estimate the focus of expansion (FOE) and the time-to-contact (TTC)

5. Detect obstacles and make a balance decision (turn the robot right or left)

Matlab’s Virtual Reality Toolbox makes it possible not only to visualize a virtual world, but also to capture it as an image from a specified position, orientation and rotation. The virtual world was written in VRML with a plain text editor, and it can be viewed in your internet browser if you have a VRML viewer installed (you can install one here).

(Click here to view the VRML file with your VRML viewer.)

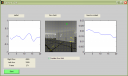

To calculate the optical flow field of two successive camera images, I used a C-optimized version of Horn and Schunck’s optical flow algorithm (see here for details).
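To make the idea concrete, here is a minimal sketch of a Horn and Schunck style iteration. It is written in plain Python rather than Matlab or C, and it is not the optimized routine used in the project. The function name `horn_schunck`, the clamped central differences and the plain 4-neighbour average are simplifying assumptions; the defaults `alpha=1.0` and `iterations=4` mirror the arguments passed to `OpticalFlowMatlab` in the project code.

```python
def horn_schunck(img1, img2, alpha=1.0, iterations=4):
    """Estimate dense optical flow (u, v) between two grayscale frames.

    img1, img2: equally sized lists of rows of floats.
    alpha: smoothness weight; iterations: number of update sweeps.
    Illustrative sketch only, not the project's optimized C routine.
    """
    h, w = len(img1), len(img1[0])

    def px(img, y, x):
        # Clamp coordinates so border pixels reuse their nearest neighbour.
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    def zeros():
        return [[0.0] * w for _ in range(h)]

    # Spatial derivatives (central differences averaged over both frames)
    # and the temporal derivative (frame difference).
    Ix, Iy, It = zeros(), zeros(), zeros()
    for y in range(h):
        for x in range(w):
            Ix[y][x] = 0.25 * (px(img1, y, x + 1) - px(img1, y, x - 1)
                               + px(img2, y, x + 1) - px(img2, y, x - 1))
            Iy[y][x] = 0.25 * (px(img1, y + 1, x) - px(img1, y - 1, x)
                               + px(img2, y + 1, x) - px(img2, y - 1, x))
            It[y][x] = img2[y][x] - img1[y][x]

    u, v = zeros(), zeros()
    for _ in range(iterations):
        u_new, v_new = zeros(), zeros()
        for y in range(h):
            for x in range(w):
                # Local flow average (simplified 4-neighbour mean).
                u_avg = 0.25 * (px(u, y - 1, x) + px(u, y + 1, x)
                                + px(u, y, x - 1) + px(u, y, x + 1))
                v_avg = 0.25 * (px(v, y - 1, x) + px(v, y + 1, x)
                                + px(v, y, x - 1) + px(v, y, x + 1))
                ix, iy, it = Ix[y][x], Iy[y][x], It[y][x]
                # Horn-Schunck update: pull the averaged flow toward the
                # brightness-constancy constraint ix*u + iy*v + it = 0.
                t = (ix * u_avg + iy * v_avg + it) / (alpha ** 2 + ix ** 2 + iy ** 2)
                u_new[y][x] = u_avg - ix * t
                v_new[y][x] = v_avg - iy * t
        u, v = u_new, v_new
    return u, v


# Usage: a horizontal brightness ramp shifted one pixel to the right.
frame1 = [[float(x) for x in range(8)] for _ in range(8)]
frame2 = [[float(x) - 1.0 for x in range(8)] for _ in range(8)]
u, v = horn_schunck(frame1, frame2, alpha=1.0, iterations=4)
```

With only four iterations the estimate is still smoothed toward zero, so the horizontal flow in the middle of this ramp is positive but stays below the true shift of one pixel. For obstacle detection that is usually acceptable, because only the relative magnitudes matter.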

Based on this optical flow field, the flow magnitudes of the right and left halves of each image are summed. If the total flow magnitude of the view reaches a certain threshold, it is assumed that there is an obstacle in front of the robot. The flow magnitudes of the right and left half images are then used to formulate a balance strategy: if the right flow is larger than the left flow, the robot turns left; otherwise it turns right.
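The balance strategy described above can be sketched in a few lines. This is an illustrative Python version, not the project's Matlab code: the function name `steering_decision`, the `threshold` value and the `"straight"` result for the no-obstacle case are assumptions made for the example.

```python
import math


def steering_decision(u, v, threshold):
    """Return 'straight', 'left' or 'right' from a dense flow field.

    u, v: lists of rows with the horizontal and vertical flow components.
    threshold: hypothetical total-magnitude level that signals an obstacle.
    """
    h, w = len(u), len(u[0])
    left = right = 0.0
    for y in range(h):
        for x in range(w):
            mag = math.hypot(u[y][x], v[y][x])
            if x < w // 2:
                left += mag      # left half of the image
            else:
                right += mag     # right half of the image

    # Below the threshold no obstacle is assumed (assumption: keep heading).
    if left + right < threshold:
        return "straight"
    # More flow on the right means the obstacle is closer there: turn left.
    return "left" if right > left else "right"


# Usage: strong flow in the right half only.
u_demo = [[0.0, 0.0, 1.0, 1.0], [0.0, 0.0, 1.0, 1.0]]
v_demo = [[0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]]
decision = steering_decision(u_demo, v_demo, threshold=1.0)
```

The threshold has to be tuned to the image size and the robot's speed, since the summed magnitude grows with both.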

Robot’s camera view at the same time:

It’s a very nice application of optical flow. I’m interested in how you computed the TTC (time to contact). You used the formula 1/sum(Vmag(:))*100. Can you please enlighten me and tell me what the result (in the plot) means? Thank you!

Hi, I have the same question. What does 1/sum(Vmag(:))*100 mean, and how did you obtain it? Do you have a paper for this application? Please answer me. Thank you!

Assuming that the robot does not change its direction, the sum of the optical flow magnitudes increases as it approaches an obstacle. The time-to-contact (TTC) is normally a measure of the time (here: the number of frames) until that contact happens. I wasn’t able to work out a correct TTC formula, so I played with the magnitude numbers until the result looked like a TTC value.

Someone else wrote to me that he did find a better TTC formula – he wrote:

One way to compute the TTC would be like this:

- compute the distance from the focus of expansion to the center of the image: D_foe = abs(x_cim - x_foe) + abs(y_cim - y_foe);

- compute the TTC by substituting D_foe for the value 100: ttc = D_foe/sum(Vmag(:));

This also works fine, and this is how I found out what you meant by the value 100. 🙂
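For comparison, the two TTC heuristics discussed above can be written side by side. This is a small Python sketch rather than the Matlab original: the function names are hypothetical, `scale=100.0` is the constant from the formula 1/sum(Vmag(:))*100, and returning infinity when there is no flow at all is an added assumption to avoid a division by zero. Neither value is a calibrated time; both only shrink as the summed flow magnitude grows.

```python
import math


def flow_magnitude_sum(u, v):
    """Sum of per-pixel flow magnitudes, the equivalent of sum(Vmag(:))."""
    return sum(math.hypot(a, b)
               for row_u, row_v in zip(u, v)
               for a, b in zip(row_u, row_v))


def ttc_heuristic(u, v, scale=100.0):
    """Original heuristic: 1/sum(Vmag(:))*100, a TTC-like value in frames."""
    total = flow_magnitude_sum(u, v)
    # Assumption: no flow means no approaching obstacle, so report infinity.
    return math.inf if total == 0 else scale / total


def ttc_from_foe(u, v, foe, center):
    """Variant from the comment: replace 100 by the FOE-to-center distance.

    foe, center: (x, y) tuples in pixels; the distance is the sum of the
    absolute coordinate differences, as in the formula for D_foe.
    """
    d_foe = abs(center[0] - foe[0]) + abs(center[1] - foe[1])
    total = flow_magnitude_sum(u, v)
    return math.inf if total == 0 else d_foe / total


# Usage: a single pixel with flow (3, 4), so the magnitude sum is 5.
u_demo = [[3.0, 0.0], [0.0, 0.0]]
v_demo = [[4.0, 0.0], [0.0, 0.0]]
ttc_a = ttc_heuristic(u_demo, v_demo)                                   # 100 / 5
ttc_b = ttc_from_foe(u_demo, v_demo, foe=(10, 20), center=(40, 30))     # 40 / 5
```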

Great application! Thanks for sharing the code.

Will it be ok to use your code in an obstacle avoidance application at an undergraduate level research project?

Thanks a lot.

There are some code segments for which Matlab shows errors. Can you tell me the exact version of Matlab in which I should run the code? Thanks a lot.

Thanks man, thanks a lot. It’s working and it’s working and working, wohooooooo!

Amazing work, but I ran into a problem when running it.

Matlab says:

Undefined function or method 'OpticalFlowMatlab' for input arguments of type 'double'.

Error in ==> ofnav>startstop at 136

[Vx, Vy] = OpticalFlowMatlab(glob.images, 1, 4); %% images, alpha, iterations

??? Error while evaluating uicontrol Callback

Thank you for answering.

Superb work.

If you are interested, the following research papers use similar techniques, and one of them discusses a real robot controlled by fuzzy logic and optical flow:

https://www.researchgate.net/publication/270339912_Simulation_of_optical_flow_and_fuzzy_based_obstacle_avoidance_system_for_mobile_robots

https://www.researchgate.net/publication/233855641_An_autonomous_robot_navigation_system_based_on_optical_flow?ev=prf_pub

Interesting project. The link to the Matlab code seems to be broken (I realise that this is an old project). Has the code been moved, or would it be possible to send it to me, please?

I am interested in how you moved the camera around in the code and also how you output the virtual reality camera footage for vision processing.

Thanks.

Hello, please try this link: https://de.mathworks.com/matlabcentral/fileexchange/22713-optical-flow-based-robot-obstacle-avoidance-with-matlab

Hi,

Thanks for sending me the link, this has helped me to learn a lot!

I get some errors for which I can’t find a solution. Would you be able to help, please? When I run “ofnav” I am presented with the GUI, and when I press the start button I get the following errors (I am using Windows 7 and Matlab 2016b):

Error using vrfigcapture (line 13)

Invalid function name.

Error in ofnav>startstop (line 129)

Error while evaluating UIControl Callback

Also, I am working on a PhD project and your project is related. Would you mind if in the future I reused some parts of your code (of course with credit and references given)? This will save me from repeating some of the ground work that has already been done, allowing me to focus on novel contributions.

Thanks

Hello,

Could you try to use function ‘fcapture’ (as it is in the .m file) instead? I’m not sure why the function is named ‘vrfigcapture’ (sorry, it’s too long ago ;)).

Yes, you can use parts of it for your project as long as you credit and reference my work.

Thanks,

Alexander

I did notice that naming issue, but that doesn’t seem to solve it. I still get the error at line 13 of the function:

xraw = vrsfunc('CaptureFigure', handle);

I understand that this is an old project for you so I shall try and find the solution myself. The project has already helped me to learn a lot, thanks again for sharing.